Abstract

This dataset was created for tasks involving sensor fusion and depth camera evaluation. It consists of three calibrated camera views (two stereo cameras and one time-of-flight (ToF) camera) with ground truth depth.

Description

This dataset was created for tasks involving sensor fusion and depth camera evaluation.

It consists of the output of three cameras (two stereo cameras and one time-of-flight camera):

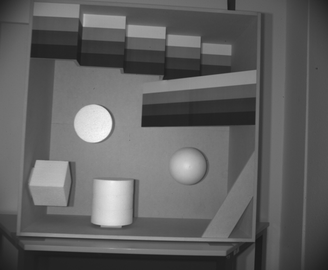

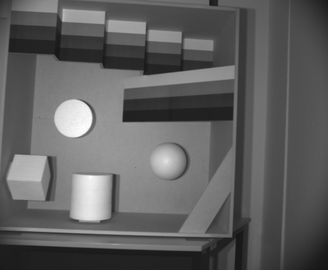

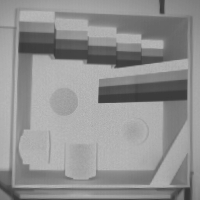

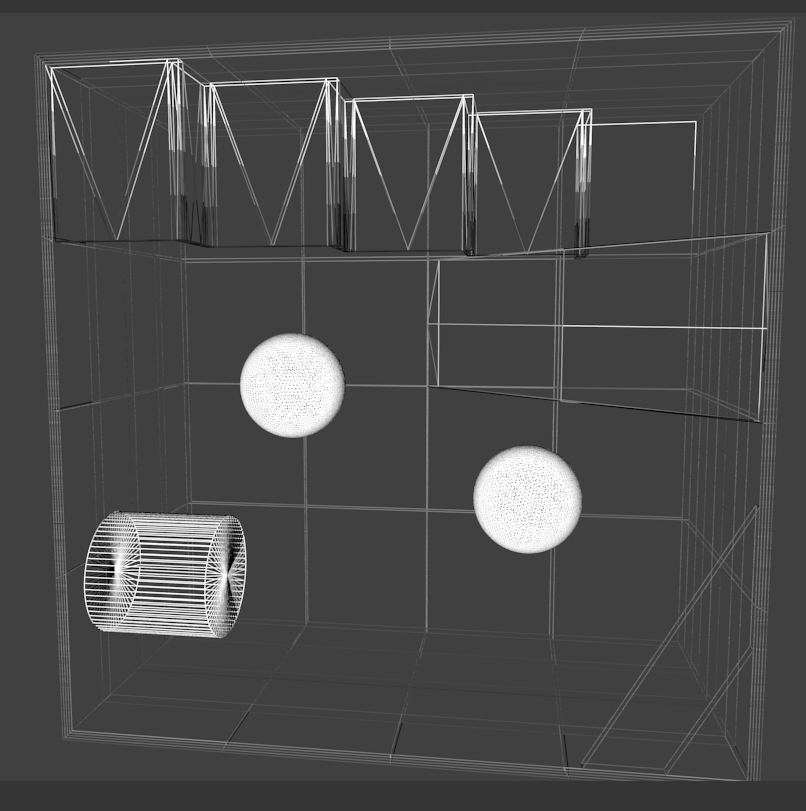

The images show the so-called HCI Targetbox, a 1 m × 1 m × 0.5 m wooden box built specifically for testing depth cameras. It contains multiple objects with slanted or curved surfaces, which can be challenging for many types of depth camera.

Content

The dataset consists of the following parts:

- raw intensity images for each of the 3 cameras

- rectified intensity images for each camera

- ToF radial distance images (raw, and rectified to remove lens distortion)

- ground truth depth and ground truth radial distance for all 3 cameras

- Blender 3D file with a mesh of the Targetbox and three virtual cameras corresponding to the real cameras
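The ground truth is provided both as depth and as radial distance, and the ToF camera itself measures radial distance. For rectified (distortion-free) images and an ideal pinhole model, the two differ only by the length of each pixel's viewing ray: a point at depth z imaged at pixel (u, v) lies at radial distance r = z · sqrt(1 + ((u − c_x)/f_x)² + ((v − c_y)/f_y)²). The NumPy sketch below applies this relation and back-projects depth to 3D points in the camera frame. It assumes that "depth" means the coordinate along the optical axis, that pixel centres sit at integer coordinates, and that the intrinsics f_x, f_y, c_x, c_y (in pixels) come from the dataset's calibration; the values in the example are placeholders, not calibration data.

```python
import numpy as np


def ray_scale(width, height, fx, fy, cx, cy):
    """Per-pixel ratio r / z (radial distance over depth) for an ideal pinhole camera.

    Assumes pixel (u, v) = (column, row) is centred at integer coordinates
    and that fx, fy, cx, cy are given in pixels.
    """
    # Both grids have shape (height, width); u varies along columns, v along rows.
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    x = (u - cx) / fx
    y = (v - cy) / fy
    return np.sqrt(1.0 + x**2 + y**2)


def radial_to_depth(radial, fx, fy, cx, cy):
    """Convert a radial distance image (length along each pixel ray) to depth along the optical axis."""
    h, w = radial.shape
    return radial / ray_scale(w, h, fx, fy, cx, cy)


def depth_to_radial(depth, fx, fy, cx, cy):
    """Inverse of radial_to_depth."""
    h, w = depth.shape
    return depth * ray_scale(w, h, fx, fy, cx, cy)


def backproject(depth, fx, fy, cx, cy):
    """Back-project a depth image to an (H, W, 3) array of camera-frame points (X, Y, Z)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


if __name__ == "__main__":
    # Placeholder intrinsics for a 200 x 200 image; NOT the dataset's calibration.
    fx = fy = 200.0
    cx = cy = 99.5
    radial = np.full((200, 200), 1.5)  # synthetic radial distance image
    depth = radial_to_depth(radial, fx, fy, cx, cy)
    points = backproject(depth, fx, fy, cx, cy)
    # Each back-projected point lies at the original radial distance from the camera centre.
    print(np.allclose(np.linalg.norm(points, axis=-1), radial))
```

Depth is always less than or equal to radial distance, with equality only at the principal point, so comparing a ToF measurement against the wrong ground truth type produces an error that grows towards the image corners.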

Comments:

- The focal length and principal point offset of each camera are set correctly in the Blender file, but the file format cannot store an individual pixel resolution for each camera (the render resolution is a scene-wide setting). The resolution must therefore be set manually before rendering: 1312 × 1082 pixels for the stereo cameras and 200 × 200 pixels for the time-of-flight camera.

- The Blender file comes with a preconfigured depth output node. Depth maps are saved in the OpenEXR image format, which supports floating-point depth values.
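The two comments above translate into a short workflow: select a camera, set the render resolution, render, then load the resulting depth map. The sketch below is not part of the dataset. It assumes a hypothetical camera object name (`ToF_Camera`), the classic `OpenEXR`/`Imath` Python bindings, and a channel named `R` in the written EXR file; all of these should be checked against the actual files. The cutoff passed to `valid_depth_mask` is likewise a user choice for discarding background pixels, not a value from the dataset.

```python
import numpy as np

try:
    import bpy  # only importable inside Blender's bundled Python
except ImportError:
    bpy = None

# Pixel resolutions stated in the dataset description.
STEREO_RESOLUTION = (1312, 1082)
TOF_RESOLUTION = (200, 200)


def configure_camera(scene, camera_obj, width, height):
    """Make camera_obj the active scene camera and set the render resolution in pixels."""
    scene.camera = camera_obj
    scene.render.resolution_x = width
    scene.render.resolution_y = height
    # Render at exactly width x height pixels instead of a scaled-down fraction.
    scene.render.resolution_percentage = 100


def read_exr_channel(path, channel="R"):
    """Read one float channel of an OpenEXR file into a writable (height, width) float32 array.

    The default channel name is an assumption: inspect
    OpenEXR.InputFile(path).header()["channels"] for the names actually present.
    """
    import OpenEXR  # imported lazily so the other helpers work without the bindings
    import Imath

    exr = OpenEXR.InputFile(path)
    dw = exr.header()["dataWindow"]
    width = dw.max.x - dw.min.x + 1
    height = dw.max.y - dw.min.y + 1
    raw = exr.channel(channel, Imath.PixelType(Imath.PixelType.FLOAT))
    # frombuffer returns a read-only view, so copy to get a writable array.
    return np.frombuffer(raw, dtype=np.float32).reshape(height, width).copy()


def valid_depth_mask(depth, max_depth):
    """True where depth is finite, positive and below max_depth.

    max_depth is a user-chosen cutoff for rejecting background pixels,
    which the renderer typically fills with a very large depth value.
    """
    return np.isfinite(depth) & (depth > 0) & (depth < max_depth)


if __name__ == "__main__" and bpy is not None:
    scene = bpy.context.scene
    # Hypothetical object name: look up the actual camera names in the outliner.
    tof_camera = bpy.data.objects["ToF_Camera"]
    configure_camera(scene, tof_camera, *TOF_RESOLUTION)
    # Assuming compositing is enabled, rendering also evaluates the depth output node.
    # write_still=True additionally saves the colour render to scene.render.filepath.
    bpy.ops.render.render(write_still=True)
```

Outside Blender only the NumPy helpers apply; for example, `depth = read_exr_channel("depth.exr")` followed by `np.where(valid_depth_mask(depth, 10.0), depth, np.nan)` replaces invalid pixels with NaN (file name and cutoff are placeholders).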