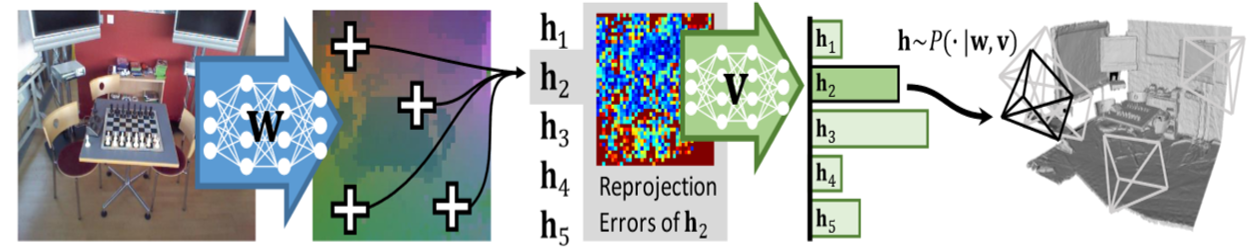

This course covers areas of computer vision that deal with the reconstruction of 3D scenes, for instance recovering a 3D scene from a video, or detecting and tracking a dynamically changing object in the scene. We introduce the underlying principles and methods to solve such tasks, where the best methods are often a combination of deep learning and traditional approaches. To do so, we cover the necessary background knowledge, such as image processing, camera models, deep learning, the image formation model, and Kalman filters.

Date and Place:

Lecture: Tuesdays from 11-13

Exercise: Thursdays from 11-13

Mathematikon B, Berliner Str. 43, SR B128

Content:

- Brief introduction to required Machine Learning concepts (Neural Networks, Convolutional Neural Networks, etc.)

- Basic Image processing (Filtering, Bilateral Filter)

- Sparse feature detection and description (points, edges, LIFT) w/ and w/o Neural Networks

- Projective Geometry, Epipolar Geometry

- Sparse reconstruction (image matching, image descriptors) w/ and w/o Neural Networks

- Robust matching w/ Neural Networks (Differentiable RANSAC and related pipelines)

- Camera Localization and SLAM

- Stereo Vision, Dense 3D Reconstruction

- 3D Object detection (End-to-End Trainable Pipelines) w/ and w/o Neural Networks

- 3D Object tracking (6D Pose estimation, Kalman Filter, Particle Filter)

Formalities:

Teaching assistants (main point of contact): Yannick Pauler: yannick.pauler@iwr.uni-heidelberg.de; Nils Krehl: nils.krehl@stud.uni-heidelberg.de

Registration: Via Moodle. Important: We plan to offer the course in its normal format (in person, not hybrid). There are only 40 slots for participants, allocated on a first-come, first-served basis.

Prerequisites: None, but a background in machine learning is recommended, e.g. Fundamentals of Machine Learning or an equivalent course.

Exam: The default is an oral examination. However, depending on interest, a group mini-project may also be offered (as in previous years).

Credit points (Leistungspunkte): 6 LP

Usability: Physics (MSc), Applied Computer Science (Angewandte Informatik, MSc), Scientific Computing (MSc)

Teaching goals:

The students

- Understand the principles behind estimating 3D point clouds and motion from two or more images, and are able to apply this knowledge to new tasks in the field of 3D reconstruction.

- Understand the principles of the image formation process and the corresponding geometry, and can use them to design new algorithms, e.g. for 3D motion estimation in autonomous driving.

- Understand and implement methods that combine machine-learning-based methods with classical computer vision techniques.

- Have studied various state-of-the-art computer vision systems and approaches, and are able to evaluate and classify new ones.

- Understand and implement different approaches for object tracking.